How do we measure Engineering Productivity

Published

Apr 3, 2025


Beyond Lines of Code: Measuring Engineering Productivity with AI

In engineering, there is no perfect measure for productivity. There are plenty of articles that discuss why traditional productivity metrics are flawed. Lines of code reward verbosity, commit counts incentivise small, meaningless changes, and story points are notoriously inconsistent across teams.

We took a different approach. Instead of trying to fix broken proxies, we built an AI-native system that estimates the actual effort and complexity behind every merged Pull Request.

There is no perfect measure for productivity, but we pride ourselves on being as close as possible to the “reality on the ground”.

A quote from one of our customers :)

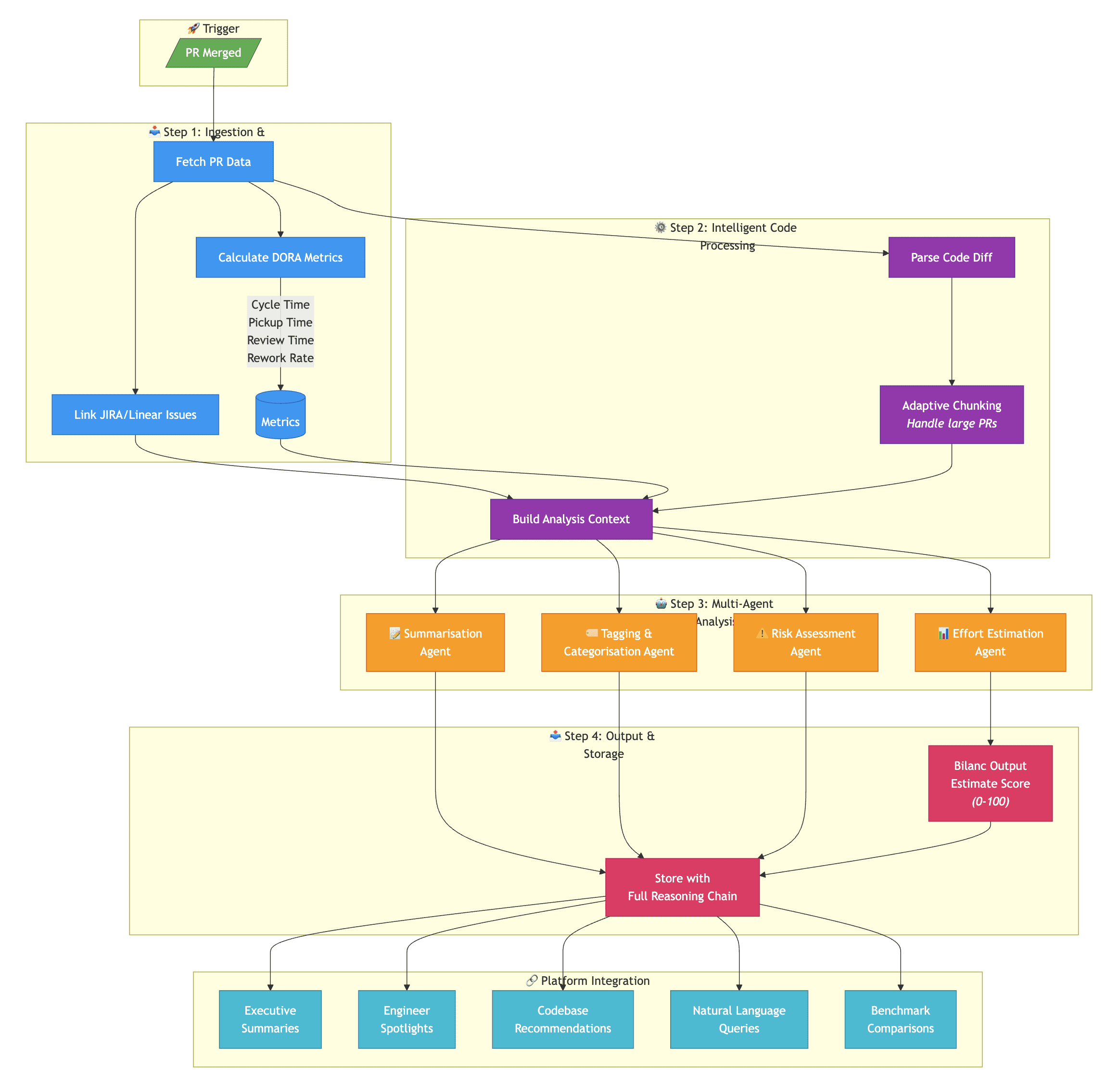

When a PR is merged, we trigger an asynchronous workflow that analyses the code changes in depth. Our system doesn't just count lines - it reads the actual diff, retrieves codebase context to better understand the change, and assigns an output estimate score from 0 to 100 based on the cognitive effort required to produce that work.

This score becomes a "unit of work" that's far more consistent than traditional metrics. A 500-line boilerplate file and a 50-line algorithm optimisation are not treated equally - because they shouldn't be.

The Technical Pipeline

Step 1: Ingestion & Metric Calculation

When a PR is merged, our data pipeline kicks off. We calculate a comprehensive set of more than 50 DORA-aligned metrics, including:

Total Cycle Time: First commit to merge

Coding Time: First activity to PR creation

Pickup Time: PR creation to first review

Review Time: First review to merge

Rework Rate: Ratio of reworked lines to total changed lines
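A minimal sketch of how the metrics listed above could be derived from a PR's timestamps and line counts. The `PullRequestTimeline` class and its field names are hypothetical and chosen for illustration; they are not Bilanc's actual schema.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class PullRequestTimeline:
    """Hypothetical record of the events and line counts for one merged PR."""
    first_activity_at: datetime
    first_commit_at: datetime
    created_at: datetime
    first_review_at: datetime
    merged_at: datetime
    changed_lines: int
    reworked_lines: int


def timing_metrics(pr: PullRequestTimeline) -> dict[str, timedelta]:
    """Compute the four time-based metrics using the definitions in the list above."""
    return {
        "total_cycle_time": pr.merged_at - pr.first_commit_at,
        "coding_time": pr.created_at - pr.first_activity_at,
        "pickup_time": pr.first_review_at - pr.created_at,
        "review_time": pr.merged_at - pr.first_review_at,
    }


def rework_rate(pr: PullRequestTimeline) -> float:
    """Ratio of reworked lines to total changed lines (0.0 for an empty change)."""
    if pr.changed_lines == 0:
        return 0.0
    return pr.reworked_lines / pr.changed_lines


example = PullRequestTimeline(
    first_activity_at=datetime(2025, 4, 1, 9, 0),
    first_commit_at=datetime(2025, 4, 1, 10, 0),
    created_at=datetime(2025, 4, 1, 15, 0),
    first_review_at=datetime(2025, 4, 2, 9, 0),
    merged_at=datetime(2025, 4, 2, 12, 0),
    changed_lines=200,
    reworked_lines=30,
)
print(timing_metrics(example)["pickup_time"])  # 18:00:00
print(rework_rate(example))  # 0.15
```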

We also pull context from Jira and Linear, linking issues to PRs via branch name matching. This gives us visibility into the business intent behind technical work.
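Branch name matching can be sketched with a regular expression. The example below assumes issue keys look like `ENG-123` (a project prefix, a hyphen, a number) and may appear in any letter case inside the branch name. The function name, the pattern, and the optional `known_prefixes` filter are illustrative assumptions, not a description of the production matcher.

```python
import re
from typing import Optional

# Assumed key shape: prefix starting with a letter, a hyphen, then digits,
# not glued to other letters or digits on either side.
ISSUE_KEY = re.compile(r"(?<![A-Za-z0-9])([A-Za-z][A-Za-z0-9]*)-(\d+)(?![A-Za-z0-9])")


def issue_keys_from_branch(
    branch: str, known_prefixes: Optional[set[str]] = None
) -> list[str]:
    """Return normalised issue keys found in a branch name, in order of appearance.

    If known_prefixes is given, only keys whose project prefix is in that set
    are kept, which filters out look-alikes such as version fragments.
    """
    keys: list[str] = []
    for prefix, number in ISSUE_KEY.findall(branch):
        prefix = prefix.upper()
        if known_prefixes is not None and prefix not in known_prefixes:
            continue
        key = f"{prefix}-{number}"
        if key not in keys:
            keys.append(key)
    return keys


print(issue_keys_from_branch("feature/ENG-123-add-polling"))  # ['ENG-123']
print(issue_keys_from_branch("sam/eng-42-fix-login"))  # ['ENG-42']
print(issue_keys_from_branch("release/v2-7-eng-9", known_prefixes={"ENG"}))  # ['ENG-9']
```

Without a list of known project prefixes, a pattern like this will also pick up fragments such as `v2-7`, so validating candidates against the tracker's real projects is the safer design.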

Step 2: Intelligent Code Processing

Large PRs can contain hundreds of files and massive amounts of code. We use agentic search techniques to handle codebases of any size - from one-line hotfixes to massive refactors spanning thousands of files - without sacrificing analysis quality.

Step 3: Multi-Agent Analysis

We decompose our analysis into specialised AI agents:

PR Summarisation Agent: Generates an executive summary of what changed, why, and the impact

Tagging & Categorisation Agent: Assigns semantic tags and classifies work into categories such as Feature Work, Bug Fixes, or Tech Improvements

Risk Assessment Agent: Identifies specific technical concerns such as security vulnerabilities, missing error handling, and performance issues

Effort Estimation Agent: Calculates the Bilanc output estimate score with transparent reasoning

Each agent is independently optimised, versioned, and evaluated. This modularity means we can improve each agent and add new capabilities without rewriting our entire system.
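One way to picture this modularity is a small registry of independently versioned agents that all receive the same diff. The sketch below is an assumption about the general shape only: the `Agent` class, the version strings, and the stub functions are hypothetical, and the stubs stand in for what would be model calls in a real system.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Agent:
    """Hypothetical agent descriptor: a name, a version, and a callable."""
    name: str
    version: str
    run: Callable[[str], dict]


def summarise_stub(diff: str) -> dict:
    # Placeholder for a model call: counts added lines, skipping file headers.
    added = sum(
        1
        for line in diff.splitlines()
        if line.startswith("+") and not line.startswith("+++")
    )
    return {"summary": f"{added} added line(s)"}


def effort_stub(diff: str) -> dict:
    # Placeholder for a model call: returns a fixed score with its reasoning.
    return {"score": 10, "reasoning": "stub: fixed placeholder score"}


def analyse_pr(diff: str, agents: list[Agent]) -> dict[str, dict]:
    """Run every agent on the same diff and record which version produced each output."""
    results: dict[str, dict] = {}
    for agent in agents:
        results[agent.name] = {"version": agent.version, "output": agent.run(diff)}
    return results


agents = [
    Agent("summarisation", "v3", summarise_stub),
    Agent("effort_estimation", "v7", effort_stub),
]
report = analyse_pr("+++ b/app.py\n+print('hi')\n-print('bye')\n", agents)
print(report["summarisation"])
```

Because each result carries the agent version that produced it, one agent can be swapped or upgraded without touching the others.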

Step 4: Transparency

Every PR analysis is stored with its full reasoning chain. When a PR receives an output estimate of 45, users can see exactly why: "Implements new routing architecture with public/private layouts, polling logic, and multiple report type handlers." This isn't a black box - it's explainable AI.

Deep Dive: The Bilanc Output Estimate

The output estimate score is our most nuanced metric. To ensure consistency, we use explicit scoring anchors calibrated across thousands of PRs.

The Scoring Scale

| Score Range | Category | Examples |
|---|---|---|
| 0-15 | Trivial | Typos, CSS tweaks, version bumps, comment updates |
| 16-30 | Simple | Using existing patterns, isolated fixes, type updates |
| 31-45 | Moderate | New features with existing patterns, multi-file refactors, standard API work |
| 46-60 | Complex | Architectural changes, multi-system features, security-critical work |
| 61-80 | Very Complex | Major features with architectural impact, cross-cutting system changes |
| 81-100 | Exceptional | System rewrites, fundamental infrastructure overhauls (extremely rare) |
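The scale above translates directly into a lookup. This is a small illustrative helper, assuming integer scores; the function name is hypothetical.

```python
import bisect

# Inclusive upper bound of each band, taken from the scoring scale above.
_UPPER_BOUNDS = [15, 30, 45, 60, 80, 100]
_CATEGORIES = ["Trivial", "Simple", "Moderate", "Complex", "Very Complex", "Exceptional"]


def score_category(score: int) -> str:
    """Map an output estimate score (0-100) to its category on the scale."""
    if not 0 <= score <= 100:
        raise ValueError(f"score must be between 0 and 100, got {score}")
    # bisect_left returns the index of the first band whose upper bound >= score.
    return _CATEGORIES[bisect.bisect_left(_UPPER_BOUNDS, score)]


print(score_category(45))  # Moderate
print(score_category(46))  # Complex
```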

Fighting Score Inflation

We include explicit guardrails to reduce score inflation:

Location ≠ Complexity: Touching auth code doesn't automatically make something complex - we assess the change, not the file path

Pattern Repetition: Applying the same change across multiple files is still simple, not moderate

Existing Helpers: Refactoring to use existing utilities is simple work, regardless of the number of files touched

Calibration & Customization

Repository Calibration: Every codebase is different. A score of 50 in a mature monolith represents different work than a 50 in a scrappy startup codebase. Our benchmarking system calculates percentiles per repository, so "typical work" always centers around 50 for that specific context.

Org-Specific Customization: Engineering cultures vary. Some organizations value documentation highly; others have strict definitions of "architectural" work. We support system prompt customizations to ensure scoring reflects your team's specific values.
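To make the repository calibration idea concrete, here is a sketch of a per-repository percentile rank. The post only states that percentiles are calculated per repository; the mid-rank formula and the function name below are assumptions chosen for illustration.

```python
from bisect import bisect_left, bisect_right


def repo_percentile(score: float, repo_scores: list[float]) -> float:
    """Mid-rank percentile (0-100) of a score within one repository's history.

    Scores strictly below count fully and ties count half, so the median
    score of a repository lands at 50.
    """
    if not repo_scores:
        raise ValueError("repo_scores must not be empty")
    ordered = sorted(repo_scores)
    below = bisect_left(ordered, score)
    equal = bisect_right(ordered, score) - below
    return 100.0 * (below + 0.5 * equal) / len(ordered)


startup_repo = [10, 20, 30, 40, 50]
monolith_repo = [40, 50, 60]
print(repo_percentile(50, startup_repo))   # 90.0
print(repo_percentile(50, monolith_repo))  # 50.0
```

The same raw score of 50 is unusually high in the first repository and exactly typical in the second, which is the distinction calibration is meant to capture.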

What the Score Is (and Isn't)

The output estimate score measures the effort required to produce the change - not value delivered, not impact, not individual worth. A critical one-line security fix might score 15 (trivial effort) while being the most important PR of the quarter.

We aggregate these scores to show trends: "This engineer averaged 23.8 points per day—from merging 2-3 small PRs or 1 medium PR daily." This is useful for tracking relative output over time, not for performance reviews.
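A points-per-day figure like the one quoted above could be computed as follows. This is a sketch under stated assumptions: the average is taken over weekdays in the reporting window, and the tuple layout `(author, merged_on, score)` is hypothetical. The post does not say which days are counted.

```python
from collections import defaultdict
from datetime import date, timedelta


def weekdays_between(start: date, end: date) -> int:
    """Count Monday-to-Friday days in the inclusive range [start, end]."""
    days = (end - start).days + 1
    return sum(
        1 for offset in range(days)
        if (start + timedelta(days=offset)).weekday() < 5
    )


def average_points_per_day(
    merged_prs: list[tuple[str, date, float]], start: date, end: date
) -> dict[str, float]:
    """Sum each author's scores for PRs merged in the window, divided by weekdays."""
    working_days = weekdays_between(start, end)
    if working_days <= 0:
        raise ValueError("window must contain at least one weekday")
    totals: dict[str, float] = defaultdict(float)
    for author, merged_on, score in merged_prs:
        if start <= merged_on <= end:
            totals[author] += score
    return {author: total / working_days for author, total in totals.items()}


prs = [
    ("ada", date(2025, 3, 3), 12),
    ("ada", date(2025, 3, 3), 10),
    ("ada", date(2025, 3, 5), 38),
    ("ada", date(2025, 3, 7), 40),
    ("lin", date(2025, 3, 4), 45),
    ("lin", date(2025, 3, 10), 20),  # outside the window, ignored
]
print(average_points_per_day(prs, date(2025, 3, 3), date(2025, 3, 7)))
# {'ada': 20.0, 'lin': 9.0}
```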

Integration: How Data Flows

The productivity data flows through our entire platform to provide actionable insights:

Executive Summaries: AI-generated briefings for CTOs and engineering managers, highlighting key accomplishments, concerning trends, and areas needing attention

Engineer Spotlights: Automated recognition of high-performing contributors with specific examples of impactful work

Codebase Recommendations: Actionable suggestions based on patterns observed in recent PRs, with historical tracking to surface recurring issues

Natural Language Queries: Ask questions like "Which engineers had the highest rework rate last month?" and get instant answers

Benchmark Comparisons: See how your team compares to industry percentiles across all metrics

How We Validate and Improve

Building an AI system is easy. Building one that is reliably accurate is hard.

Manual & Automated Evaluation: We maintain an internal dataset of manually annotated PR-Summary-Tags-Score tuples. We augment this with an LLM-as-a-Judge framework, where a separate model grades the outputs of our agents on deterministic tasks like code categorization.

Continuous Iteration: Because our agents are modular, we can A/B test different approaches per agent. If we change a prompt for the Risk Assessment Agent, we measure the impact on that specific capability without conflating results across the pipeline.
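Comparing agent output against a manually annotated dataset can be sketched as below. The metrics chosen here (category accuracy and mean absolute error on the score) and all names are assumptions for illustration; the post does not state which metrics are used internally, and the LLM-as-a-Judge step is not modelled.

```python
from dataclasses import dataclass


@dataclass
class LabelledPR:
    """Hypothetical manually annotated example: a category and a reference score."""
    pr_id: str
    category: str
    score: int


def evaluate(
    predictions: dict[str, tuple[str, int]], gold: list[LabelledPR]
) -> dict[str, float]:
    """Score predictions of the form {pr_id: (category, score)} against annotations."""
    matched = [(g, predictions[g.pr_id]) for g in gold if g.pr_id in predictions]
    if not matched:
        raise ValueError("no predictions overlap with the annotated dataset")
    correct = sum(1 for g, (category, _) in matched if category == g.category)
    abs_error = sum(abs(score - g.score) for g, (_, score) in matched)
    return {
        "category_accuracy": correct / len(matched),
        "score_mae": abs_error / len(matched),
        "coverage": len(matched) / len(gold),
    }


gold = [
    LabelledPR("pr-1", "Bug Fixes", 12),
    LabelledPR("pr-2", "Feature Work", 40),
    LabelledPR("pr-3", "Tech Improvements", 25),
    LabelledPR("pr-4", "Feature Work", 55),
]
prompt_a = {
    "pr-1": ("Bug Fixes", 14),
    "pr-2": ("Feature Work", 35),
    "pr-3": ("Tech Improvements", 25),
    "pr-4": ("Tech Improvements", 46),
}
print(evaluate(prompt_a, gold))
# {'category_accuracy': 0.75, 'score_mae': 4.0, 'coverage': 1.0}
```

Running the same function on the outputs of two prompt versions over the same annotated set gives a like-for-like comparison for a single agent.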

What’s Next?

We are actively developing:

Graph-based retrieval: Understanding how changed components connect to the broader system architecture.

Static analysis integration: Using Abstract Syntax Trees (ASTs) to identify breaking changes and dependency impacts.

Historical pattern recognition: Enriching analysis with patterns from previously indexed work.

Appendix

Bilanc AI Productivity Workflow

Samuel Akinwunmi

Founder, Bilanc